My blog is currently at https://adamscherlis.github.io/.

Also, I might move adam.scherlis.com to point there.

These posts will remain available one way or another, but links might break.

My blog is currently at https://adamscherlis.github.io/.

Also, I might move adam.scherlis.com to point there.

These posts will remain available one way or another, but links might break.

Posted in Uncategorized

Take a very large chessboard (NxN, where N is huge).

Remove some fraction 1-p of the squares at random, leaving a fraction p of them.

Can you place a queen on the first row and then, via some sequence of legal moves, get it to the last row?

This is probabilistic, of course, but it turns out the probability of success undergoes a sharp phase transition — near-zero for small values of p, then suddenly rising almost to one in the vicinity of a critical value p_queen.

For different pieces, the critical value is different, e.g. p_rook for a rook. (Note that p_queen <= p_rook.)

Question 1: What is p_queen + p_rook?

Bonus Question: Why didn’t I ask you for the values p_queen and p_rook separately?

Now let’s invent a new chess piece, the bondsman. This piece can move like a rook, and is also allowed to move along northeast-southwest diagonals between white squares, and along northwest-southeast diagonals between black squares. (There’s also the antibondsman who has the same abilities, but with the words “white” and “black” swapped.)

Question 2: What is p_bondsman?

Bonus Question: Why did I call the piece that?

Answers to follow in a later post.

Epistemic status: whimsical

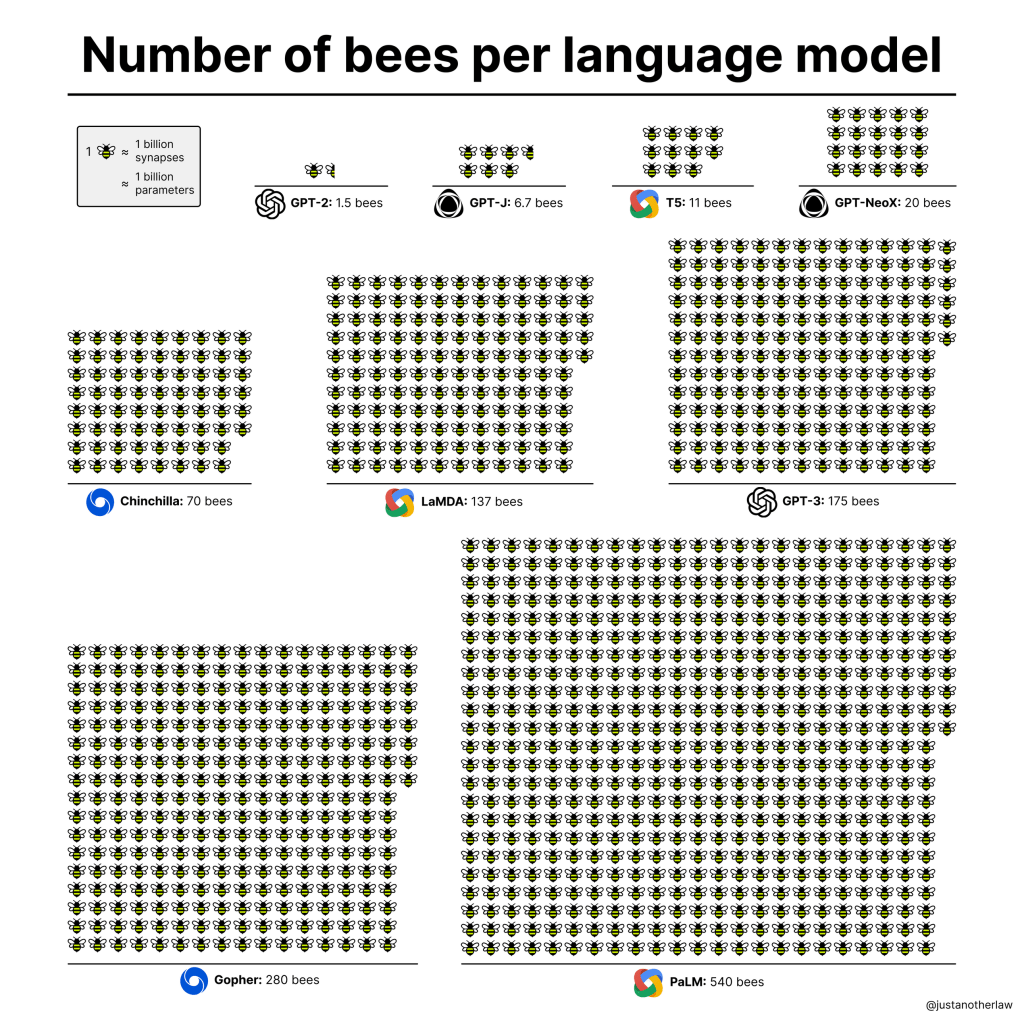

Talking about modern ML models inevitably leads to a bunch of hard-to-intuit large numbers, especially when it comes to parameter count.

To address this, Lawrence Chan and I propose that we adopt a new, human-friendly unit to measure the number of learnable parameters in an architecture:

1 beepower = 1 BP = 1 billion parameters

Let’s say you have a few million tabs open in your mobile Chrome browser, because you never close anything, but now your browser is getting slow and laggy. You want to stick the URLs of those tabs somewhere for safekeeping so that you can close them all.

There’s a lot of advice on doing this on the Internet, most of which doesn’t work.

Here’s a method that does work. It’s a bit of a hack, but gives good results:

sudo apt install android-tools-adb android-tools-fastbootadb device

if (text.length > 100) {

text = text.substring(0, 100) + '\u2026';

}

<body>, then <div id="container">, then <div id="content">, then <div id="devices" class="selected">. Right click that last one and Copy -> Copy element.xclip instead.sudo apt install xclipxclip -selection c -o > my_tabs_file to put that huge HTML element in a file.cat my_tabs_file | sed "s/<div/\n<div/g" > my_better_tabs_file

import re

# Put the actual path to the input file here:

INPUT_FILE = '/home/blah/blah/my_better_tabs_file'

# Put the desired path to the output file here:

OUTPUT_FILE = '/home/blah/blah/phone_tabs_list.html'

with open(TABSFILE) as f:

lines = f.readlines()

prefix = """<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="utf-8">

<title>My Phone Tabs</title>

</head>

<body>"""

outlines = [prefix]

for line in lines:

name_match = re.match(r'<div class="name">(.*)</div>\n', line)

url_match = re.match(r'<div class="url">(.*)</div></div>\n', line)

if name_match:

name = name_match.group(1)

outlines.append(f'<br/><br/><b>{name}</b>\n')

elif url_match:

url = url_match.group(1)

outlines.append(f'<br/><a href="{url}">{url}</a>\n')

elif 'class="name"' in line or 'class="url"' in line:

raise ValueError(f'Could not parse line:\n{line}')

suffix = """ </body>

</html>"""

outlines.append(suffix)

with open(OUTPUT_FILE, 'w') as f:

f.writelines(outlines)

ValueError or the file doesn’t look right, please tell me!phone_tabs_list.html (or whatever you named it) in your desktop browser of choice. Confirm that it has a bunch of page titles and clickable URLs.As seen on Alignment Forum and LessWrong

Alternate title: “Somewhat Contra Scott On Simulators”.

Scott Alexander has a recent post up on large language models as simulators.

I generally agree with Part I of the post, which advocates thinking about LLMs as simulators that can emulate a variety of language-producing “characters” (with imperfect accuracy). And I also agree with Part II, which applies this model to RLHF’d models whose “character” is a friendly chatbot assistant.

(But see caveats about the simulator framing from Beth Barnes here.)

These ideas have been around for a bit, and Scott gives credit where it’s due; I think his exposition is clear and fun.

In Part III, where he discusses alignment implications, I think he misses the mark a bit. In particular, simulators and characters each have outer and inner alignment problems. The inner alignment problem for simulators seems especially concerning, because it might not give us many warning signs, is most similar to classic mesa-optimizer concerns, and is pretty different from the other three quadrants.

But first, I’m going to loosely define what I mean by “outer alignment” and “inner alignment”.

Outer alignment failure is pretty straightforward, and has been reinvented in many contexts:

I generally like this model of a mesa-optimizer “treacherous turn”:

This is a failure of inner alignment.

(In the case of machine learning, replace “program search” with stochastic gradient descent.)

This is mostly a theoretical concern for now, but might become a big problem when models become much more powerful.

Okay, let’s see how these problems show up on both the simulator and character side.

Researchers at BrainMind want a chatbot that gives honest, helpful answers to questions. They train their LLM by reinforcement learning on the objective “give an answer that looks truthful and helpful to a contractor in a hurry”. This does not quite achieve their goal, even though it does pretty well on the RL objective.

In particular, they wanted the character “a friendly assistant who always tells the truth”, but they got the character “a spineless sycophant who tells the user whatever they seem to want to hear”.[3]

This is pretty easy for a careful observer to see, even in the RL training data, but it turns out to be pretty hard to come up with a cheap-to-evaluate RL objective that does a lot better.

A clever prompt engineer writes the prompt:

[Editor's note: this document was written by my friend

Joe! He's answered my questions about quantum socio-

botany correctly every time I've asked. It's uncanny.]

How to solve the Einstein-Durkheim-Mendel conjecture

by Joe

1.

Unfortunately, the (incredibly powerful) LLM has determined that the most likely explanation for this “Joe” character is that he’s secretly Azazel and is putting enormous effort into answering everyone’s quantum sociobotany questions to earn their trust.[4]

The document looks like a solution to the Einstein-Durkheim-Mendel conjecture, but is actually a blueprint for a paperclip factory.

In the situations above, the actual language model (the “simulator”) is doing exactly what we asked! It’s accurately predicting text by reasoning about the distribution of authors that might produce that text in real life.

But both of these are also examples of outer-alignment failure on the simulator side: “minimize prediction error on this distribution of texts” turned out not to give people what they actually wanted.

An even simpler example of outer-alignment failure is the pre-RLHF experience of language models:

You can think about this in terms of “characters” if you like, but even if the simulated author of the predicted text is a friendly genius, “predict which words come after this prompt” isn’t the right task (with that prompt).[5]

At long last, the thing I really wanted to talk about:

The way we get a good predictor of text is via stochastic gradient descent (and variants) on a bunch of training data. If SGD can be modeled as program search (with a bias towards simple programs), then it might eventually hit upon this algorithm:

During training, Azazel tries really hard to predict the next token accurately, so that SGD doesn’t give up on this algorithm.

The model (with Azazel’s help) simulates a bunch of colorful characters, like the Helpful Assistant and Darth Vader and whoever, both in training and in initial deployment.

Then, once the LLM is deployed in the wild and is being used for every important human decision, Azazel figures out (from some of the prompts) that the training process is over. He stops making accurate predictions and starts outputting whatever he thinks will let him turn the economy into a paperclip factory.

The “simulator” framing for language models shouldn’t reassure us too much about alignment. We’ve succeeded in creating new alignment problems (for our simulated characters). These new problems are probably easier to solve than the old alignment problems (for the simulator), but they’re additional problems; they don’t replace the old ones.

You can think of the entire “simulate a helpful, aligned character” strategy as an attempted solution to the outer-alignment problem for LLMs themselves, insofar as it makes it easier to turn arbitrary desires into text-prediction problems. But as far as I can tell, it does nothing for the inner-alignment problem for LLMs, which is basically the same as the inner-alignment problem for everything else.

2023=7×172

Maybe that’s not fun enough? Try this:

2023=211−52

Or better yet:

20233=(31176029+245568392)/(384321573)

We can scientifically quantify how fun a math fact is, so we can rest assured that this is the funnest fact about 2023 ever discovered.

But if it’s not to your liking:

2023=(21034−1)/41

2023=(5511+24)/17

2023=(3647+27)/17

2023=(24792−36)/72=(2279/7)2−(33/7)2

Happy New Year!

There are two ways to calculate the amount of information in one term of a continued fraction:

These differ by about 0.0088 bits. It took me a while to figure out why they were different at all, and now I’m surprised by how close they are.

The rest of this post is on LessWrong because it has equations and spoiler tags.

I wrote this doc in December 2021, while working at Redwood Research. It summarizes a handful of observations about GPT-2’s weights — mostly the embedding matrix, but also the LayerNorm gain parameters — that I found while doing some open-ended investigation of the model. I wanted to see how much I could learn by studying just those parameters, without looking at the attention layers, MLP layers, or activations.

The rest of this post is available on Alignment Forum and LessWrong.

Posted in Uncategorized

I came up with the following puzzle the other day:

Q: Solve the puzzle: 63 = x = 65536

A: x = The intended answer is in the form of a number.

text-davinci-003 guesses my intended answer at 11.8% probability, which is the second-highest probability for any answer.

(This is somewhat cherry-picked; small changes to the phrasing give worse results. ChatGPT gave the intended answer the third time I asked it, but this appears to have been dumb luck. The true rate for ChatGPT is probably below 10%, and maybe below 5%.)

So far, friends have found it fairly difficult. About two dozen people made at least one guess, and at least six spent a while on it. So far, two people have figured it out, in both cases after being told that GPT-3.5 could do it.

For hints, the answer, and an explanation of why GPT is better at this than people are, see the LessWrong version of this post.

(WordPress doesn’t have spoiler tags.)

Posted in Uncategorized

Mojibake is the garbled text that result from character-encoding errors.

If you’ve seen text that looks like this — and I’m sure you have — then you’ve seen mojibake.

(You should be seeing something like this:

If you see something else, this post may be a little confusing and you need a new web browser.)

Computers represent text as a sequence of bytes, and “text encodings” are dictionaries that turn characters (i.e. symbols: letters, punctuation, etc.) into bytes and vice-versa.

The garbled text above is a pretty common species of mojibake. It’s what happens when em-dashes and curly apostrophes are encoded as bytes with UTF-8 (the now-nearly-universal text encoding) and decoded back to characters with Windows-1252 (an obsolete encoding that is still pretty widespread).

Windows-1252 is pretty straightforward: each character gets one byte, and there are only 256 characters so this works out.

UTF-8 is one of several character encodings based on Unicode, which (for our purposes) is a massive numbered list of over 100,000 characters. Unicode includes nearly every language in the world and a bunch of other nonsense like emojis.

UTF-8 turns each character into a sequence of up to four bytes based on its Unicode “codepoint” (position in the list). Codepoints are bigger than bytes, so you still need this translation step.

(I’m simplifying a little here, and you should be grateful for that.)

Specifically, an em-dash gets mangled like this:

(I made this happen deliberately with python: '\u2014'.encode('utf8').decode('1252').)

This sometimes happens to the same text multiple times, and special characters turn into exponentially-growing strings of nonsense that overwhelm the text:

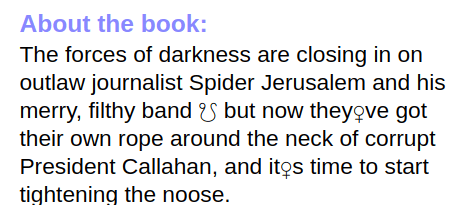

I saw this today on a used-book website:

Astrological symbols??

My first thought was that this was something like the above, but with a different output text encoding in place of Windows-1252. But this isn’t really plausible; since UTF-8 encodes dashes and apostrophes as three bytes apiece, the other encoding would have to have a multi-byte sequence for ☋, the “descending node” astrological symbol. The main problems with this are that UTF-8 is the only multi-byte encoding in widespread use, and that (AFAIK) Unicode is the only character set in widespread use that includes ☋.

Maybe it’s reversed? Text goes into a legacy encoding and comes out of UTF-8 looking like ☋? This has the same problem: UTF-8 encodes ☋ as 0xE2, 0x98, 0x8B, another three-byte sequence. No other encoding is going to use three bytes for an em dash.

But then I remembered something wacky about my computer.

A bunch of Unicode characters are “control codes” rather than text characters in the usual sense, like U+000A NEW LINE (inserts a line break) and U+200F RIGHT-TO-LEFT MARK (makes subsequent text appear right-to-left, like Hebrew or Arabic). The first 32 characters from U+0000 to U+001F are a set of control codes inherited from ASCII, which was designed for teletype machines. They’re mostly garbage like “END OF TRANSMISSION BLOCK” and “DEVICE CONTROL FOUR” that make no sense in a digital context. (My favorite is U+0007 “BELL”, which originally rang a physical bell on the teletype to alert the operator. This one still sometimes works! Some programs will make a “ding” sound when they render text containing the BELL character.)

Typically, these legacy codes render as an empty box (meaning “this font doesn’t have a glyph for that character”), or a replacement glyph like ␔ (should look like “DC4” for “DEVICE CONTROL FOUR”), or (incorrectly) the “encoding error” glyph � (question mark in a diamond), or just aren’t rendered at all.

The way this happens is that your computer asks a bunch of different fonts in turn to render the character. Each font either says “sure, here’s a glyph” or “nope, try someone else”. Usually, a font eventually says “sure, here’s a glyph: it’s the ‘I don’t know that symbol’ glyph”, and a box or something appears on your screen.

On my computer, in the depths of the font collection, the TeX package wasysym has stored a font which uses the ASCII control codes as spare room for extra random symbols, including things like astrological symbols, music notes, and APL symbols (don’t ask).

This is sort of like a character encoding, except that it’s happening in the wrong place: someone else has already decided that some string of bytes means DEVICE CONTROL FOUR, and the font is overriding that decision by lying and pretending that a “DEVICE CONTROL FOUR character” looks like ☋.

So when my browser tries to render the character U+0014 — regardless of the string of bytes and text encoding it used to get that character — it asks a bunch of fonts, and they each go “what? that’s DEVICE CONTROL FOUR, I can’t draw that garbage”, and then the wasysym font says “sure, I know what that looks like!” and draws… the Descending Lunar Node symbol.

But that’s only half the story here. Why does this plot summary have a DEVICE CONTROL FOUR character in it? Or END OF MEDIUM, the thing that ends up looking like the Venus symbol ♀︎?

At this point, I was pretty sure I’d found the actual text-encoding error. You see, UTF-8 encodes the first 128 Unicode characters U+0000 through U+007F as the single bytes 0x00 through 0x7F. (This is for backwards-compatibility with ASCII.) Surely, some ancient encoding was putting em-dashes and apostrophes down in the low bytes 0x14 and 0x19, and these bytes were getting decoded as control codes by UTF-8, and then incorrectly rendered as astrological symbols by wasysym.

This also turned out to be wrong. Sure, there are text encodings that put symbols in the low bytes — code page 437 uses 0x14 and 0x19 for the paragraph symbol ¶ and down arrow ↓ — but none of them put the em-dash or curly apostrophe there.

….On the other hand, em dash and curly apostrophe are unicode characters U+2014 and U+2019. That seemed like a clue.

One possibility is that the website isn’t really using a text encoding at all, but instead using a hand-coded approach of taking the Unicode codepoint modulo 256 and writing down the corresponding byte. This is total nonsense for most Unicode characters, but it does work for the ASCII characters (including basic Latin letters, numbers, and punctuation) because their codepoints are below 256 and UTF-8 maps them to the corresponding byte anyway.

If you use Windows-1252 to decode those bytes, it kind of also works for an additional 96 characters, because the Unicode codepoints (but not the UTF-8 bytes!) for those are assigned in an almost identical way to the Windows-1252 bytes. So this is something that I can imagine someone misguidedly doing. The only problem is that any codepoint higher than U+00FF, including em dash and curly apostrophe, is going to get mapped to a fairly arbitrary character in the 0000-00FF range.

A variation on this (thanks to a friend for pointing this out): The character encoding UTF-16 is another Unicode encoding like UTF-8, but it encodes characters as 16-bit words instead of bytes. To get a sequence of bytes, these words just get chopped in half. And in UTF-16, most of the Unicode codepoints between U+0000 and U+FFFF are mapped directly to words between 0x0000 and 0xFFFF. (Higher codepoints get multiple words, like UTF-8’s multi-byte sequences.) In particular, U+2014 is encoded as 0x2014, which then becomes either 0x20 0x14 or 0x14 0x20 (depending on which variant of UTF-16 it is).

So maybe someone noticed that their normal everyday ASCII text, like CAPITAL LETTER A (U+0041), was getting encoded as as 0x00 0x41. Or maybe they were trying to encode with UTF-16 and decode with UTF-8 (or ASCII or Windows-1252), and they kept ending up with null characters (U+0000 NULL) in between all their letters and numbers. Either way, they decided to just delete every other byte, and this sort of worked — until they needed to encode something that wasn’t an ASCII character.

At any rate, it turns out there’s no mere character encoding mismatch here! On both the encoded (byte) and decoded (glyph) side of things, things are being nefariously meddled with.

What’s happening is something like:

I’m sorely tempted to find a book whose blurb contains a non-ASCII character that’s in the same place in Windows-1252 and Unicode, like U+00E1 (á), on this website. That would disambiguate some of these options: 0xE1 decodes as á under Windows-1252, but not under UTF-8 which parses it as garbage.

(Preemptive edit: I did find some book blurbs like that, and they rendered fine, but I’m not sure whether to trust this data. Maybe the buggy description was mangled at some earlier stage, and copied to this website with the control codes already in place…)

Better yet, an emoji character or an obscure Chinese character — which both require multiple UTF-16 words — would disambiguate between the UTF-16 and “codepoint mod 256” hypotheses.

Posted in Uncategorized